SHAD3S: A model to Sketch, Shade and Shadow

Raghav BV, Abhishek Joshi, Saisha Narang, Vinay P Namboodiri

(PDF) (arXiv) (code) (data) (brushes) (trained models) (data synthesis)

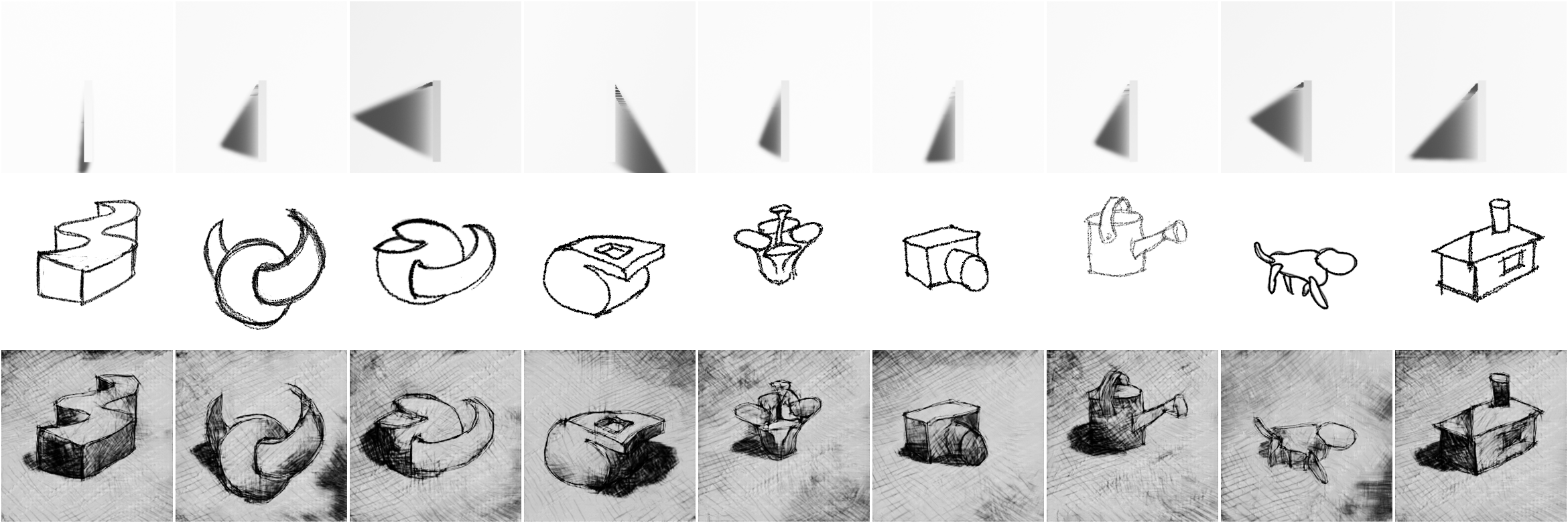

Figure 1: SHAD3S, a model to Sketch, Shade and Shadow, is a completion framework that provides realistic hatching for an input line-drawn sketch, consistent with the underlying 3D shape and a user-specified illumination. The figure shows the choice of illumination conditions in the top row, user sketches in the middle row, and the completion suggestions by our system in the bottom row. Note that neither the wide variety of shapes drawn by the user nor the wide variety of brush styles used was available in the training set.

- How to cite

@inproceedings{RJNN21,
  title = {{{SHAD3S}}: {{A}} Model to {{Sketch}}, {{Shade}} and {{Shadow}}},
  shorttitle = {{{SHAD3S}}},
  author = {Venkataramaiyer, Raghav B. and Joshi, Abhishek and Narang, Saisha and Namboodiri, Vinay P.},
  booktitle = {Proceedings 27th {Winter} {Conference} on {Applications} of {Computer} {Vision} ({WACV})},
  year = {2021},
}

- Abstract

Hatching is a common method used by artists to accentuate the third dimension of a sketch and to illuminate the scene. Our system attempts to compete with a human at hatching generic three-dimensional (3D) shapes, and also tries to assist her in a form-exploration exercise. The novelty of our approach lies in the fact that we make no assumptions about the input other than that it represents a 3D shape, and yet, given contextual information of illumination and texture, we synthesise an accurate hatch pattern over the sketch, without access to 3D or pseudo-3D information. In the process, we contribute towards a) a cheap yet effective method to synthesise a sufficiently large, high-fidelity dataset pertinent to the task; b) a pipeline built on a conditional generative adversarial network (cGAN); and c) an interactive utility with GIMP, a tool for artists to engage with automated hatching or a form-exploration exercise. User evaluation of the tool suggests that the model performance generalises satisfactorily over diverse input, both in terms of style and shape. A simple comparison of inception scores suggests that the generated distribution is as diverse as the ground truth.
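For readers unfamiliar with the inception score mentioned above, the following is a minimal sketch of its standard definition, the exponential of the mean KL divergence between each per-image class distribution and the marginal class distribution. The function name `inception_score` and the plain-list input format are assumptions made for illustration. This is not the authors' evaluation code, and in practice the class probabilities would come from a pretrained classifier.

```python
import math


def inception_score(probs):
    """Standard inception score from per-image class probabilities.

    probs: list of rows, each row a probability distribution over classes
    (assumed to be produced by some pretrained classifier).
    """
    n = len(probs)
    k = len(probs[0])
    # Marginal class distribution p(y), averaged over all images.
    marginal = [sum(row[j] for row in probs) / n for j in range(k)]
    # Mean KL(p(y|x) || p(y)); zero-probability terms contribute nothing.
    mean_kl = 0.0
    for row in probs:
        mean_kl += sum(
            p * math.log(p / marginal[j]) for j, p in enumerate(row) if p > 0
        )
    mean_kl /= n
    return math.exp(mean_kl)


# Confident and balanced predictions give a high score,
# identical uncertain predictions give the minimum score of 1.
diverse = inception_score([[1.0, 0.0], [0.0, 1.0]])
uniform = inception_score([[0.5, 0.5], [0.5, 0.5]])
```

A higher score indicates predictions that are individually confident yet collectively spread across classes, which is why comparing the score of generated images against that of the ground truth serves as a rough diversity check.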

- The Mathematical Model

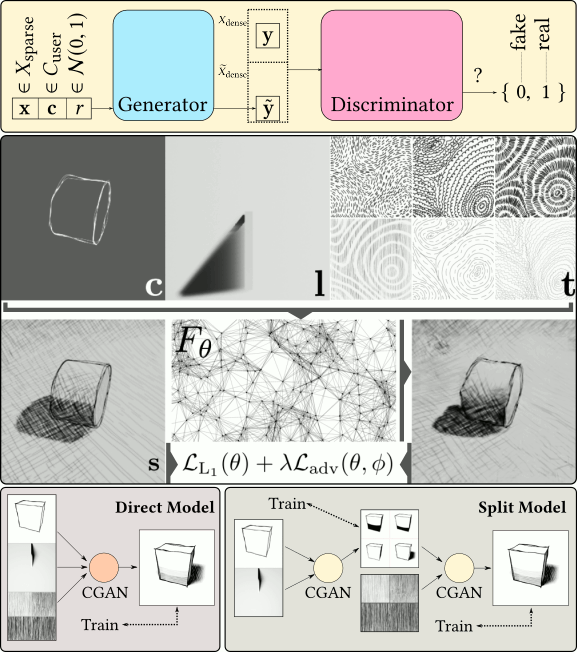

We use the cGAN framework to pose hatching as the problem of learning the distribution of pixel responses, conditioned on the neighbourhood patch of each pixel across multiple input modalities, namely the contour, illumination and textures.

Figure 2: Overview of our framework. Clockwise from top: illustration of the GAN structure, detailing the injection of data from different modalities into the cGAN framework; further detailed view of the direct model; coarse sketch of a split model; analogous sketch of a direct model.
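As a point of reference, the conditioning described above can be written in the standard conditional GAN form. This is the generic objective from the cGAN literature, stated under the assumption that the condition bundles the three modalities. The symbols $G$ (generator), $D$ (discriminator), $c$ (condition), $y$ (target hatched render) and $z$ (noise) are notation introduced here, and the losses actually used by the authors may include further terms.

```latex
\min_{G}\,\max_{D}\;
\mathbb{E}_{(c,\,y)}\bigl[\log D(y \mid c)\bigr]
+ \mathbb{E}_{c,\,z}\bigl[\log\bigl(1 - D(G(z \mid c) \mid c)\bigr)\bigr],
\qquad
c = (\text{contour},\ \text{illumination},\ \text{texture})
```

The discriminator sees the condition alongside either a real or a generated render, so the generator is pushed to produce hatching that is plausible for that particular contour, illumination and texture rather than plausible in isolation.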

- Dataset

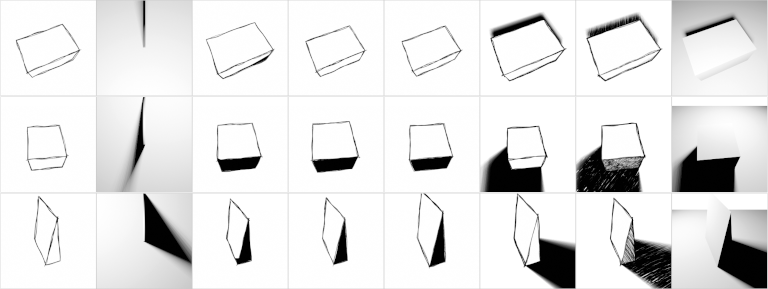

We introduce the SHAD3S dataset, which, for a given contour representation of a mesh under a given illumination condition, provides the illumination masks on the object, a shadow mask on the ground, and the diffuse and sketch renders.

Figure 3: A few examples from the dataset. Columns, left to right: cnt: contour; ill: illumination; hi: highlight mask; mid: midtone mask; sha: shade mask (on object); shw: shadow mask; sk: sketch render; dif: diffuse render.
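One way to picture a single dataset record is as a bundle of eight aligned image layers, named after the column abbreviations in Figure 3. The sketch below is a hypothetical in-memory container, not the released data format. The class name `Shad3sSample`, the single-channel array layout, and the grouping into `condition()` and `targets()` are all assumptions made for illustration.

```python
from dataclasses import dataclass, fields

import numpy as np


@dataclass
class Shad3sSample:
    """Hypothetical container for one record (assumed layout, H x W arrays)."""

    cnt: np.ndarray  # contour
    ill: np.ndarray  # illumination
    hi: np.ndarray   # highlight mask
    mid: np.ndarray  # midtone mask
    sha: np.ndarray  # shade mask (on object)
    shw: np.ndarray  # shadow mask (on ground)
    sk: np.ndarray   # sketch render
    dif: np.ndarray  # diffuse render

    def __post_init__(self):
        # All layers are assumed to be pixel-aligned, so shapes must agree.
        shapes = {getattr(self, f.name).shape for f in fields(self)}
        if len(shapes) != 1:
            raise ValueError(f"all layers must share one shape, got {shapes}")

    def condition(self) -> np.ndarray:
        """Stack contour and illumination as channels (assumed model input)."""
        return np.stack([self.cnt, self.ill], axis=0)

    def targets(self) -> np.ndarray:
        """Stack the three object masks and the ground shadow mask."""
        return np.stack([self.hi, self.mid, self.sha, self.shw], axis=0)


# Usage with blank 4x4 placeholder layers.
names = [f.name for f in fields(Shad3sSample)]
sample = Shad3sSample(**{n: np.zeros((4, 4), dtype=np.float32) for n in names})
cond = sample.condition()    # shape (2, 4, 4)
masks = sample.targets()     # shape (4, 4, 4)
```

Keeping the layers as named fields rather than one anonymous tensor makes it harder to mix up, say, the on-object shade mask with the ground shadow mask when assembling inputs and targets.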

- Qualitative Results

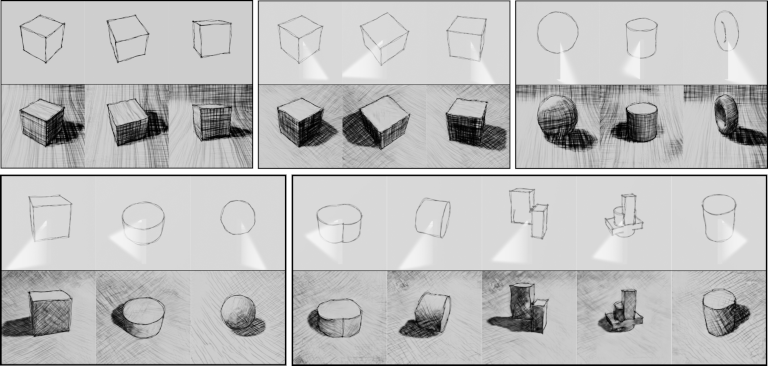

Here is a progressive evaluation of our model using a dataset with background.

Figure 4: Progressive evaluation of our model with varying camera pose, illumination, constituent geometry and texture. Clockwise from top left: pose; pose+lit; pose+lit+shap; all; txr.